Machine learning, a subset of artificial intelligence, has revolutionized numerous aspects of our daily lives. From enhancing speech recognition to predicting traffic patterns and detecting online fraud on a large scale, its impact is undeniable. At the heart of many of these advancements lies computer vision, a field empowered by machine learning, enabling machines to “see” and interpret the visual world as humans do.

The effectiveness of any computer vision model hinges significantly on the quality and precision of its training data. This data is primarily composed of annotations applied to images, videos, and other visual content.

Image annotation, also called photos annotation in this guide, is the process of labeling images so that the characteristics of the data are defined at a human-understandable level. This labeled data becomes the cornerstone for training machine learning models. The accuracy of these models, especially in computer vision tasks, depends directly on the quality of the annotated data.

what is image annotation

This comprehensive guide will delve into everything you need to know about photos annotation, providing the knowledge to make informed decisions for your projects and business needs. We will address the following key questions:

- What exactly is photos annotation?

- What are the essential components for annotating photos effectively?

- What are the different types of photos annotation?

- What are the common techniques used in photos annotation?

- How are companies leveraging photos annotation?

- What are the typical use cases for photos annotation?

- Why is photos annotation indispensable?

Delving into Photos Annotation: Definition and Purpose

Photos annotation, also known as image annotation, is the meticulous process of labeling images to train artificial intelligence (AI) and machine learning (ML) models. This process typically involves human annotators who utilize specialized image annotation tools to label images or tag relevant information. This might include assigning categories to different objects within an image, drawing boundaries around specific items, or highlighting key features. The resulting output, often termed structured data, is then inputted into a machine learning algorithm, effectively “training” the model.
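To make "structured data" concrete, here is a minimal sketch of what one annotated image might look like once exported. The field names (`image`, `labels`, `category`, `bbox`), the file name, and the helper `categories_in` are assumptions made for illustration; each annotation tool defines its own export schema.

```python
import json

# Hypothetical annotation record for one image. The field names are
# illustrative and do not follow the schema of any particular tool.
annotation = {
    "image": "street_001.jpg",
    "width": 1280,
    "height": 720,
    "labels": [
        # bbox is assumed to be [x, y, width, height] in pixels
        {"category": "car", "bbox": [412, 305, 180, 96]},
        {"category": "pedestrian", "bbox": [890, 280, 45, 130]},
    ],
}


def categories_in(record):
    """Return the sorted, de-duplicated category names in one record."""
    return sorted({label["category"] for label in record["labels"]})


# Serialize to JSON, a common interchange format for training pipelines.
serialized = json.dumps(annotation, indent=2)
print(serialized)
print(categories_in(annotation))
```

A training pipeline would typically read many such records and pair each one with its image file.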

Consider an example where annotators are tasked with labeling all vehicles within a diverse set of images. This annotated data can then be used to train a model capable of recognizing and detecting vehicles. The model can learn to differentiate vehicles from other elements on the road, such as pedestrians, traffic signals, and potential obstacles, which is crucial for applications like autonomous navigation.

Autonomous driving systems are a prime example of how photos annotation powers computer vision. The applications are vast and continuously expanding. We will explore more use cases later, but first, let’s understand the fundamental requirements before embarking on a photos annotation project.

Essential Components for Effective Photos Annotation

While the specific requirements may vary depending on the project, certain core components are fundamental to the success of any photos annotation endeavor. These building blocks include diverse and representative images, skilled and trained annotators, and a robust annotation platform.

Diverse and Representative Images

Training a machine learning algorithm to make accurate predictions necessitates a substantial volume of images – often hundreds, if not thousands. The greater the number of independent images, and the more diverse and representative they are of real-world conditions, the better the resulting model will be.

Imagine training a security camera system to identify criminal activity or suspicious behavior. To create a reliable model, you would require images of the target environment captured from various angles and under different lighting conditions. Sometimes, it’s also important to isolate specific elements within an image and remove distracting backgrounds. Online background-removal tools can help streamline this preprocessing step.

security images in different conditions

Ensuring your image dataset encompasses a wide range of scenarios and conditions is critical to guaranteeing the precision and reliability of your prediction results.

Trained and Skilled Annotators

A team of well-trained and professionally managed annotators is essential for driving a photos annotation project to successful completion. Establishing a rigorous Quality Assurance (QA) process and maintaining clear communication between the annotation service team and project stakeholders are paramount for effective project execution. Providing annotators with clear and comprehensive annotation guidelines is also a data labeling best practice. This helps to minimize errors early in the process, before the data is used for model training.

Regular feedback is also crucial for an effective QA process. Create an environment where annotators feel comfortable asking questions and seeking clarification when needed. Providing detailed feedback, especially regarding edge cases, can significantly improve annotation quality and consistency.
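One way a QA process can put a number on consistency is to have two annotators label the same images and measure how often they agree beyond chance. The sketch below uses Cohen's kappa for that purpose. This is a common agreement statistic, not something the workflow above prescribes, and the label lists are made-up sample data.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set")
    n = len(labels_a)
    # Fraction of items on which the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random according to
    # their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels from two annotators for the same four images.
annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

A value near 1 indicates strong agreement. Low values suggest the guidelines are ambiguous and that annotators need feedback on the disputed cases.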

A Suitable Annotation Platform

A functional and user-friendly annotation tool is the backbone of any successful photos annotation project. When selecting an annotation platform, ensure it provides the necessary tools to handle your specific use cases.

Consider the features that are critical for your project. Does the platform offer collaborative annotation capabilities? Are there tools for quality management and progress tracking? Perhaps you need specific annotation types or functionalities within the editor. Inquire about these needs with the platform providers. An integrated management system and robust quality control processes are vital for monitoring project progress and ensuring data quality.

Technical support is also a key consideration. Choose a platform that offers comprehensive documentation and responsive technical support, ideally with a 24/7 support team. This ensures that you can address any technical issues promptly and keep your project on track. Leading companies in the industry often choose platforms like SuperAnnotate for their photos annotation needs due to their robust features and reliable support.

User-Centric Quality

An efficient photos annotation platform should be designed to minimize errors and inconsistencies in the annotated data. Ideally, it should facilitate remote user management while also providing tools for quality assessment and review of annotators’ work.

An advanced annotation platform should proactively detect and reduce human error, while also enhancing productivity by automating complex annotation tasks. This combination of error prevention and efficiency is crucial for delivering high-quality annotated data in a timely manner.

Exploring Different Types of Photos Annotation

Moving forward, let’s explore the various categories of photos annotation that are commonly encountered. While these types are distinct in their essence, they are not mutually exclusive. Combining different annotation types can often significantly enhance the accuracy of your machine learning models.

different types of image annotation

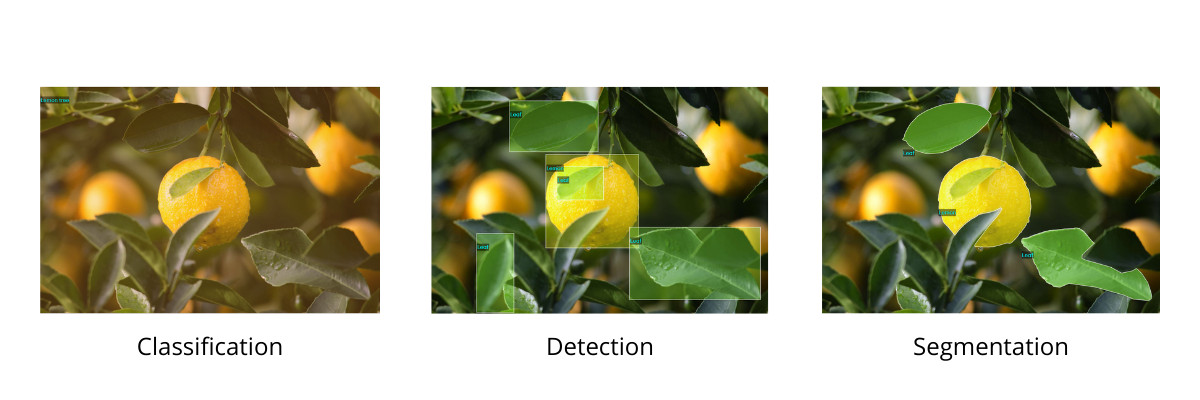

Image Classification

Image classification is a fundamental annotation task focused on understanding the overall content of an image. It involves assigning a single label to the entire image, categorizing it into a specific class. The goal is to identify and categorize the primary subject or theme of the image, rather than focusing on individual objects within it. Image classification is typically applied to images where a single, dominant object or scene is present.

classified dataset

Object Detection

In contrast to image classification, object detection focuses on identifying and localizing specific objects within an image. It involves assigning labels to individual objects of interest and determining their precise location within the image frame. Object detection not only identifies the “what” (the object) but also the “where” (its location).

detecting oranges

For computer vision object detection tasks, you have the option to train your own object detector using your annotated images or leverage pre-trained detectors. Popular approaches for object detection are built on convolutional neural networks (CNNs) and include region-based CNNs (R-CNNs) and the YOLO (You Only Look Once) family of architectures.

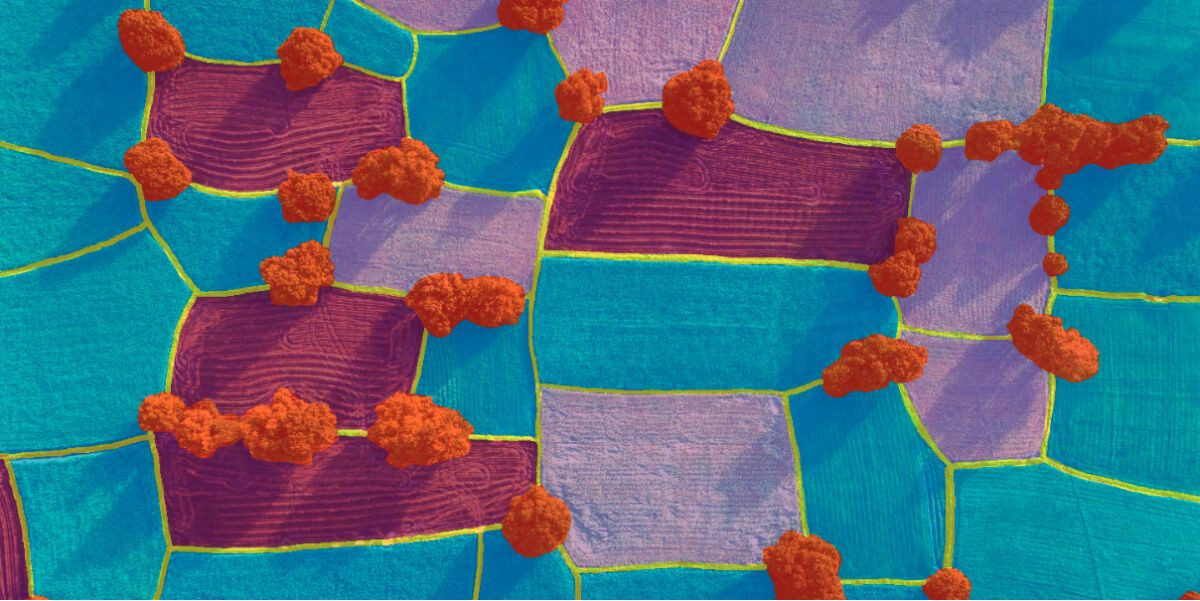

Segmentation: Pixel-Level Precision

Segmentation takes image analysis a step further, offering pixel-level precision in object identification. This method involves partitioning an image into multiple segments and assigning a label to each segment. Essentially, it’s pixel-by-pixel classification and labeling.

Segmentation is used to accurately delineate object boundaries and margins within images. It is commonly employed for complex tasks that demand high precision in differentiating objects and regions. Segmentation is considered a pivotal task in computer vision and can be further categorized into three sub-types: semantic segmentation, instance segmentation, and panoptic segmentation.

Semantic Segmentation: Classifying Every Pixel

Semantic segmentation involves dividing an image into meaningful regions or clusters and assigning a class label to every pixel within each cluster. It’s a pixel-level prediction task where every pixel in the image is assigned to a specific class. There are no pixels left unclassified in semantic segmentation.

In essence, semantic segmentation is the process of classifying each pixel in an image to understand the scene at a granular level, differentiating between various object categories and background regions.
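As a rough illustration, a semantic segmentation label can be stored as a grid with one class ID per pixel. The tiny 4x6 mask and the class IDs below are invented for this sketch; real masks have the same resolution as the image.

```python
from collections import Counter

# Hypothetical class IDs for this sketch.
CLASS_NAMES = {0: "background", 1: "road", 2: "car"}

# One class ID per pixel; no pixel is left unlabeled.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 2, 2, 0, 0, 0],
    [1, 2, 2, 1, 1, 1],
    [1, 1, 1, 1, 1, 1],
]


def pixels_per_class(mask):
    """Count how many pixels were assigned to each class name."""
    counts = Counter(value for row in mask for value in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in sorted(counts.items())}


print(pixels_per_class(mask))  # {'background': 10, 'road': 10, 'car': 4}
```

The per-class counts add up to the total number of pixels, which reflects the rule that every pixel belongs to exactly one class.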

Instance Segmentation: Differentiating Individual Objects

Instance segmentation is a computer vision task focused on detecting and delineating individual instances of objects within an image. It’s a specialized form of image segmentation that not only identifies object categories but also distinguishes between separate instances of the same object type.

Instance segmentation is highly relevant in modern machine learning applications, finding use in areas like autonomous vehicles, agriculture, medical imaging, and surveillance. It identifies the existence, location, shape, and count of individual objects. For example, instance segmentation can be used to count the number of people in an image, differentiating each person as a separate instance.

Semantic vs. Instance Segmentation: Key Differences

Semantic segmentation and instance segmentation are often confused, so let’s clarify their differences with an example.

semantic vs instance segmentation

Consider an image containing three dogs that need to be annotated. In semantic segmentation, all dogs would be assigned to the same “dog” class, treating them as a single, unified entity. In contrast, instance segmentation would identify each dog as a separate instance, even though they share the same “dog” label. Each dog would be segmented and labeled individually.

Instance segmentation is particularly valuable when it’s necessary to track or analyze individual objects of the same type separately. This detailed object-level understanding makes instance segmentation one of the more complex yet powerful segmentation techniques.
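The difference can be sketched on a tiny mask. The example below assumes a hand-made binary "dog" mask and uses a simple flood fill to split it into instances. This works only because the three dogs in the mask do not touch; in practice annotators outline each instance explicitly instead of deriving it this way.

```python
from collections import deque

# Semantic mask: 1 = dog, 0 = background. All dogs share one label.
semantic = [
    [1, 1, 0, 0, 1, 0, 0],
    [1, 1, 0, 0, 1, 0, 1],
    [0, 0, 0, 0, 0, 0, 1],
]


def label_instances(semantic, target=1):
    """Give each 4-connected region of `target` pixels its own instance ID."""
    rows, cols = len(semantic), len(semantic[0])
    instance = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if semantic[r][c] != target or instance[r][c]:
                continue  # background, or already part of an instance
            next_id += 1
            instance[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                cr, cc = queue.popleft()
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if inside and semantic[nr][nc] == target and not instance[nr][nc]:
                        instance[nr][nc] = next_id
                        queue.append((nr, nc))
    return instance, next_id


instance_mask, dog_count = label_instances(semantic)
for row in instance_mask:
    print(row)
print(dog_count)  # 3
```

The semantic mask can only answer "which pixels are dog?", while the instance mask can also answer "how many dogs are there, and which pixels belong to each?".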

Panoptic Segmentation: Unifying Semantic and Instance Segmentation

Panoptic segmentation combines the strengths of both instance and semantic segmentation. It aims to classify every pixel in an image (semantic segmentation) while also identifying the specific object instance to which each pixel belongs (instance segmentation). In panoptic segmentation, every pixel is assigned both a semantic class label and an instance ID.

In our dog example, panoptic segmentation would classify all dog pixels as “dog” (semantic segmentation) and also differentiate each dog as a separate instance (instance segmentation). A key characteristic of panoptic segmentation is that every pixel in the image receives exactly one label, so segments never overlap.
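A panoptic label can be sketched as a pair of values per pixel, a class ID and an instance ID, packed into one number. The packing rule used here (`class_id * 1000 + instance_id`), the class IDs, and the one-row image are assumptions for illustration; annotation formats differ in how they encode the pair.

```python
OFFSET = 1000  # assumed upper bound on the number of instances per class


def encode(class_id, instance_id):
    """Pack a (class, instance) pair into a single integer label."""
    return class_id * OFFSET + instance_id


def decode(value):
    """Recover the (class, instance) pair from a packed label."""
    return divmod(value, OFFSET)


# Hypothetical classes: 0 = grass (background region), 1 = dog.
semantic = [[0, 1, 1, 0, 1, 0, 1]]
instance = [[0, 1, 1, 0, 2, 0, 3]]  # background pixels keep instance ID 0

panoptic = [
    [encode(s, i) for s, i in zip(sem_row, inst_row)]
    for sem_row, inst_row in zip(semantic, instance)
]
print(panoptic)  # [[0, 1001, 1001, 0, 1002, 0, 1003]]
print(decode(1002))  # (1, 2)
```

Because each pixel holds a single packed value, two segments cannot claim the same pixel, which is the non-overlap property described above.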

Common Photos Annotation Techniques

Numerous photos annotation techniques exist, each suited to different use cases and data types. Understanding the most common techniques is crucial for determining the best approach for your project and selecting the appropriate annotation tools.

Bounding Boxes: Rectangular Object Localization

Bounding boxes are a widely used technique for drawing rectangular boxes around objects in images. They are effective for annotating objects with relatively symmetrical shapes, such as vehicles, furniture, or packages.

image annotation with bounding boxes

Bounding box annotation is essential for training algorithms to detect and locate objects. The autonomous vehicle industry heavily relies on bounding boxes to annotate pedestrians, traffic signs, and other vehicles, enabling self-driving cars to navigate roads safely. Cuboids, which are three-dimensional bounding boxes, are also used for more complex spatial understanding.

Bounding boxes simplify the task for algorithms to identify objects and associate them with their learned functions, making them a fundamental technique in object detection.
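A standard way to compare two bounding boxes, for example an annotator's box against a reviewer's box or a model prediction against the label, is intersection over union (IoU). The sketch below assumes boxes stored as `(x_min, y_min, x_max, y_max)` in pixels; other tools store `(x, y, width, height)` instead, so the format is an assumption here.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


annotator_box = (0, 0, 10, 10)
reviewer_box = (5, 5, 15, 15)
print(iou(annotator_box, reviewer_box))   # 25 / 175, roughly 0.143
print(iou(annotator_box, annotator_box))  # 1.0
```

An IoU of 1.0 means the boxes are identical and 0.0 means they do not overlap. QA workflows often flag boxes whose IoU with a reference box falls below a chosen threshold.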

Polylines: Annotating Linear Features

Along with bounding boxes, polylines are among the most straightforward annotation techniques. They are used to annotate linear features such as roads, lane markings, sidewalks, and wires. A polyline is created by connecting a series of points (vertices) with straight line segments, which makes it particularly effective for tracing structures like pipelines, railway tracks, and roads.

As you might expect, polylines are crucial for training AI-powered vehicle perception models, allowing autonomous vehicles to accurately track road layouts and navigate complex road networks.
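In data terms, a polyline is just an ordered list of vertices. The following sketch, with made-up coordinates for a lane marking, shows that representation and computes the length of the line by summing its segments.

```python
import math


def polyline_length(vertices):
    """Total length of an open polyline given as ordered (x, y) vertices."""
    if len(vertices) < 2:
        raise ValueError("a polyline needs at least two vertices")
    # Pair each vertex with its successor and sum the segment lengths.
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(vertices, vertices[1:])
    )


# Hypothetical lane marking traced with three clicks, in pixel coordinates.
lane_marking = [(0, 0), (3, 4), (3, 10)]
print(polyline_length(lane_marking))  # 11.0
```

Unlike a polygon, the last vertex is not connected back to the first, so the shape stays open.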

Polygons: Precise Edge Definition

Polygons are used to annotate the outlines of objects with irregular or asymmetrical shapes, such as buildings, vegetation, and landmarks. Polygon annotation involves precisely tracing the object’s edges by selecting a series of x and y coordinates along its boundary.

polygon annotation

Polygon annotation is frequently used in object detection and recognition models due to its flexibility and ability to achieve pixel-perfect labeling. Polygons can capture more complex shapes and angles compared to bounding boxes. Annotators have the freedom to adjust polygon vertices to accurately represent an object’s shape, making polygons a technique that closely resembles image segmentation in its precision.
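To see why polygons can fit an irregular shape more tightly than a bounding box, the sketch below stores a polygon as a list of `(x, y)` vertices, computes its area with the shoelace formula, and compares it with the area of the smallest enclosing box. The vertex coordinates are invented for illustration.

```python
def polygon_area(vertices):
    """Area of a simple polygon via the shoelace formula."""
    if len(vertices) < 3:
        raise ValueError("a polygon needs at least three vertices")
    total = 0
    # Pair each vertex with the next one, wrapping around to close the shape.
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def enclosing_box(vertices):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the polygon."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical outline of an irregular object, in pixel coordinates.
outline = [(1, 2), (5, 1), (4, 6), (0, 4)]
x_min, y_min, x_max, y_max = enclosing_box(outline)
box_area = (x_max - x_min) * (y_max - y_min)

print(polygon_area(outline))  # 14.5
print(box_area)               # 25
```

In this example the bounding box covers 25 square pixels while the object itself covers 14.5, so a box label would include a large share of background.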

Key Points: Feature-Specific Annotation

Key points, also known as landmarks or points of interest, are used to annotate very specific locations or features on an object. Common applications include annotating body parts, key poses, and facial features; on a human face, for example, key points can pinpoint the location of the eyes, nose, and mouth.

key point annotation

Key point annotation is widely used for security applications, enabling computer vision models to rapidly recognize and differentiate human faces. This capability makes key points essential for facial recognition systems, emotion detection, biometric identification, and other similar use cases.
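A key point annotation can be sketched as a mapping from landmark names to coordinates plus a visibility flag. The landmark names, coordinates, and image size below are hypothetical, and the `(x, y, visible)` layout is one possible convention, not a fixed standard.

```python
import math

# Hypothetical landmarks for one face: name -> (x, y, visible).
face_keypoints = {
    "left_eye": (120, 95, True),
    "right_eye": (180, 95, True),
    "nose": (150, 130, True),
    "mouth": (150, 165, False),  # occluded; position estimated by the annotator
}


def normalize(keypoints, width, height):
    """Scale pixel coordinates to the 0..1 range so they are resolution independent."""
    return {
        name: (x / width, y / height, visible)
        for name, (x, y, visible) in keypoints.items()
    }


def distance(keypoints, a, b):
    """Euclidean distance in pixels between two named key points."""
    (ax, ay, _), (bx, by, _) = keypoints[a], keypoints[b]
    return math.hypot(bx - ax, by - ay)


normalized = normalize(face_keypoints, width=300, height=200)
print(normalized["nose"])
print(distance(face_keypoints, "left_eye", "right_eye"))  # 60.0
```

Distances and ratios between landmarks, such as the spacing between the eyes, are the kind of geometric signal that models trained on key point data can pick up.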

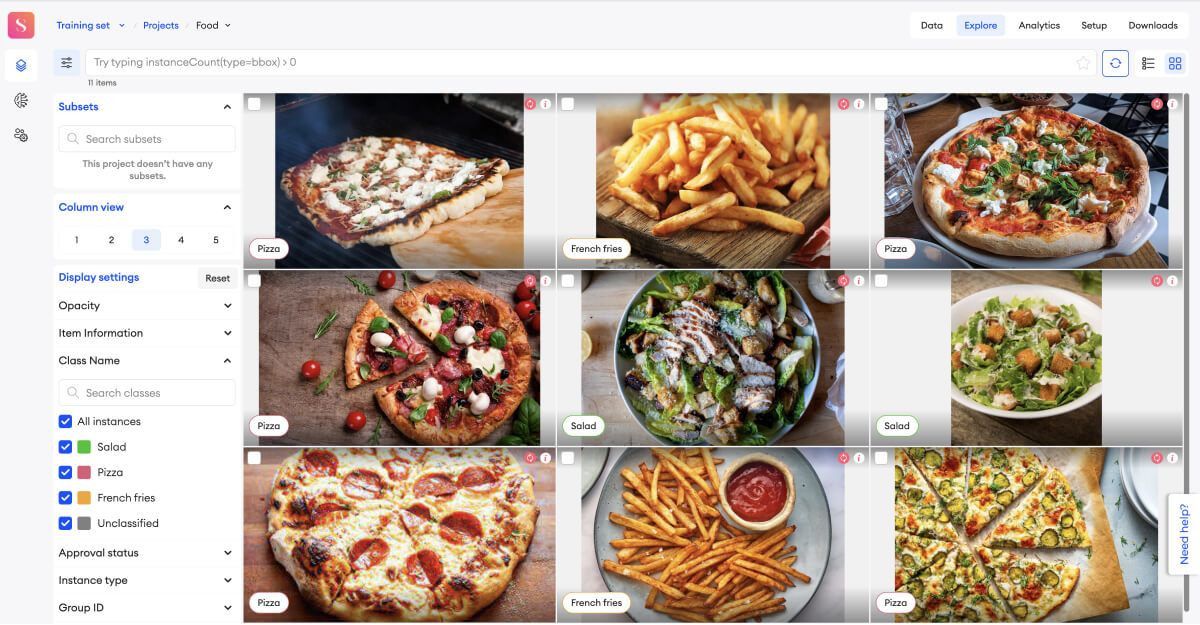

How Companies Approach Photos Annotation

Photos annotation represents a significant investment in AI initiatives, requiring resources, time, and budget. Carefully consider your project scope, budget constraints, and required delivery time when deciding how to manage your photos annotation project.

companies doing image annotation

Here are three common approaches companies adopt for photos annotation:

In-House Annotation

One option is to manage your photos annotation project using internal resources. You can utilize an in-house team of annotators or even perform the annotation yourself for small-scale or experimental projects.

If you have an in-house annotation team, implementing a robust QA process is crucial. In this scenario, you bear full responsibility for data quality. Proper training, clear instructions, and expert guidance are essential for your annotators to minimize errors and maintain high labeling quality. If rapid annotation with consistent high quality is a priority, outsourcing might be a more effective option.

Outsourcing Photos Annotation

Outsourcing photos annotation to specialized service providers can offer high-quality results delivered on time. When choosing an outsourcing partner, carefully vet potential providers to ensure they employ well-trained, experienced, and professionally managed annotators. Conducting a pilot project is a good practice to evaluate their performance and ensure the results align with your project goals.

For projects requiring specialized domain expertise, such as medical image annotation with DICOM images needing expert medical annotators, verify that the outsourcing team possesses the necessary subject matter expertise. Platforms like SuperAnnotate offer specialized annotation services and prioritize data security. To explore if outsourcing is the right fit for you, consider requesting a free pilot project.

Crowdsourcing Annotation

If resource constraints are a primary concern, crowdsourcing can be considered for photos annotation projects. Crowdsourcing solutions for computer vision and data labeling can be cost-effective and time-saving, especially for large-scale projects. However, a potential drawback of crowdsourcing is inconsistent quality control. If you opt for crowdsourcing, ensure you establish clear communication channels and implement rigorous quality control measures to maintain data accuracy.
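One quality control measure often paired with crowdsourcing is to collect several labels per image and keep only the ones with enough agreement. The sketch below shows a simple majority vote; the 0.6 agreement threshold and the sample votes are assumptions, and real projects tune both the number of voters and the threshold.

```python
from collections import Counter


def consolidate(votes, min_agreement=0.6):
    """Return (label, agreement); label is None when agreement is too low."""
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    # Items without a clear majority are left unlabeled for expert review.
    return (label if agreement >= min_agreement else None), agreement


print(consolidate(["car", "car", "truck"]))  # accepted as "car"
print(consolidate(["car", "truck", "bus"]))  # no majority, label is None
```

Images that come back with `None` can be routed to a trained reviewer, which concentrates expert time on the ambiguous cases.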

Common Use Cases for Photos Annotation

Photos annotation is instrumental in developing technologies that are integrated into our daily routines. Its applications span a wide spectrum, from everyday tasks such as unlocking a smartphone with facial recognition to complex robotics across diverse industries.

Let’s explore some prevalent use cases for photos annotation:

Facial Recognition: Security and Convenience

As previously mentioned, photos annotation is fundamental to the development of facial recognition technology. It involves annotating images of human faces using key points to identify facial features and differentiate individuals.

face recognition

Facial recognition technology is becoming increasingly ubiquitous in various sectors, including access control for mobile devices, personalized retail experiences, security and surveillance systems, and beyond.

Security and Surveillance: Enhancing Public Safety

Another significant application of photos annotation is in security and surveillance systems. It’s used to train models to detect objects of interest like suspicious packages and identify questionable behaviors. Photos annotation has greatly benefited public safety by enhancing capabilities such as crowd monitoring, night vision surveillance, facial identification for crime investigation, and burglary detection.

security and surveillance

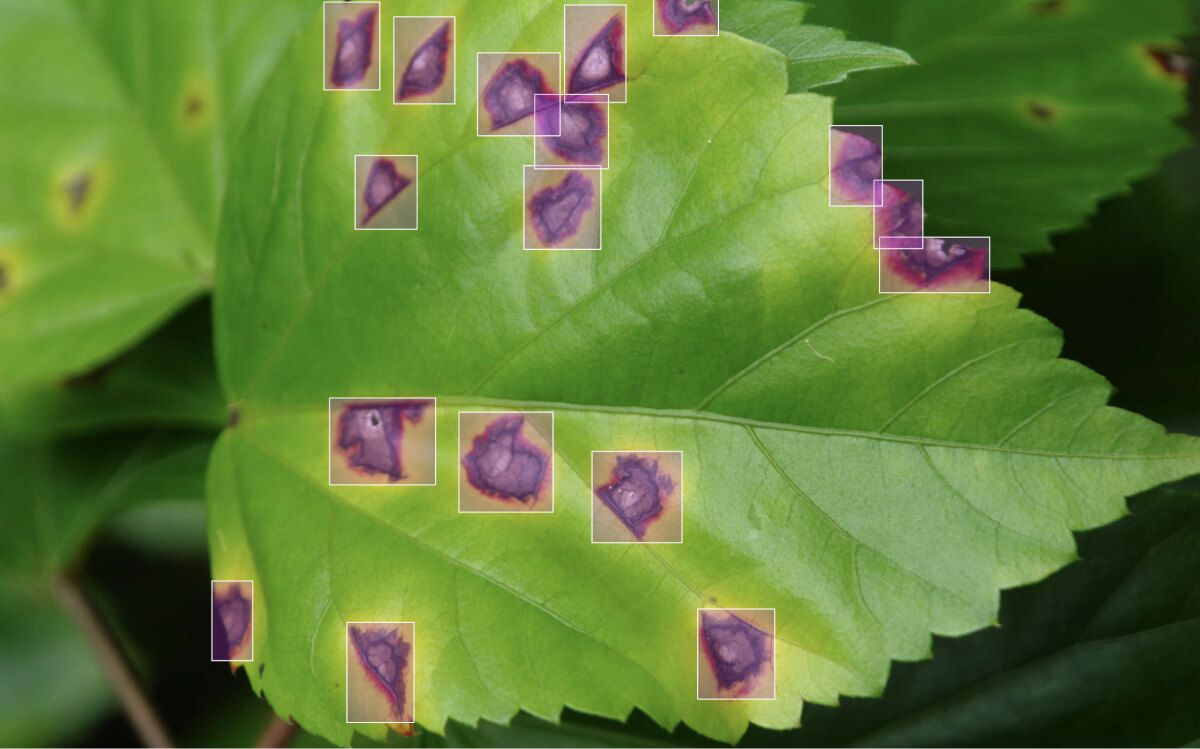

Agriculture Technology: Precision Farming

Agriculture technology relies heavily on photos annotation for various tasks, including detecting plant diseases. This is achieved by annotating images of both healthy and diseased crops. Monitoring crop growth rates, a critical factor for maximizing harvests, is also facilitated by photos annotation, providing farmers with timely insights into growth patterns across large areas.

agriculture technology

This technology saves farmers time and resources and helps detect potential issues like soil nutrient deficiencies, water shortages, pest infestations, and toxicity in early stages. AI-powered agriculture technology can also assess fruit and vegetable ripeness, leading to more profitable harvests.

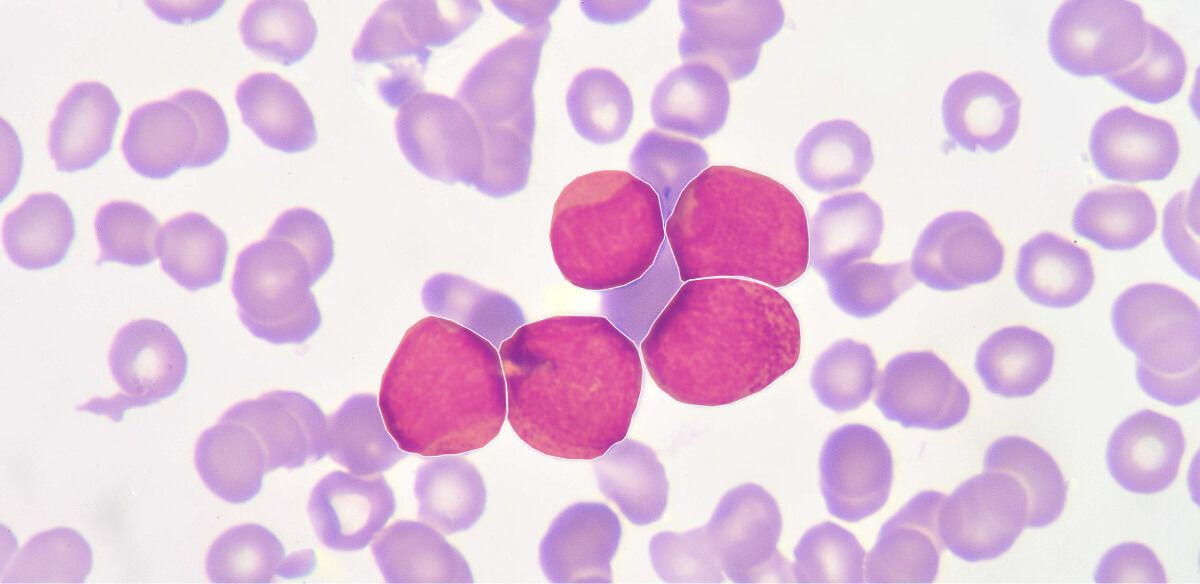

Medical Imaging: Advancing Healthcare

Photos annotation has profound applications in the medical field. For instance, annotating medical images of benign and malignant tumors using pixel-accurate techniques enables doctors to make faster and more accurate diagnoses.

medical imaging

Medical image annotation is used to diagnose various diseases, including cancer, brain tumors, and neurological disorders. Annotators highlight regions of interest in medical images using bounding boxes, polygons, or other appropriate techniques. The availability of large annotated datasets and advanced algorithms is empowering healthcare professionals to provide more accurate diagnoses and predictive models.

Robotics: Automation and Efficiency

Robotics already automates many human processes, but robots still need help interpreting what they see. Photos annotation plays a crucial role here: human-labeled data teaches robots to differentiate between various objects.

Line annotation is also significant in robotics, aiding in distinguishing different segments of a production line. Robots rely on photos annotation for tasks such as sorting packages, planting seeds, and lawn mowing, among many others.

Autonomous Vehicles: Driving the Future of Transportation

The autonomous vehicle industry is experiencing rapid growth, driven by the increasing demand for self-driving cars. This progress is significantly enabled by data annotation techniques and labeling services. Labeled data makes objects more predictable for AI, and annotation precision becomes a key factor in data-centric model development. High-quality annotated datasets are used to train algorithms, and the datasets are iteratively refined and re-annotated until the models reach the level of accuracy that autonomous vehicles require.

Object detection and object classification algorithms are fundamental to autonomous vehicles’ computer vision capabilities, enabling safe decision-making. These algorithms, trained on labeled data, allow autonomous vehicles to recognize intersections, issue emergency warnings, identify pedestrians and animals, and even take control to prevent accidents.

autonomous driving

Despite the variety of photos annotation techniques, only a subset is commonly used for training datasets in this sector. Bounding boxes, cuboids, lane annotation, and semantic segmentation are the primary techniques. Semantic segmentation helps a vehicle’s computer vision algorithms understand and contextualize scenarios, aiding in collision avoidance.

Drone/Aerial Imagery: Expanding Perspectives

Aerial or drone imagery is transforming numerous industries. Drones collect data using sensors and cameras, and turning that data into training data for AI applications requires extensive photo and video annotation. Aerial image annotation involves labeling images captured by satellites and drones to train computer vision models for analyzing specific domain characteristics.

The AI-powered drone industry is addressing critical challenges in sectors like agriculture, construction, environmental conservation, security, surveillance, and fire detection. Drones are particularly valuable for nature monitoring and conservation, efficiently capturing environmental data for researchers and conservationists. Drones can quickly gather data that would be time-consuming and expensive to collect manually.

drone imagery

Drones are used in various ways to protect wildlife and habitats, often relying on annotated data of target areas for AI training. Wildfire management is a specific example, where AI-powered drones detect fires faster than humans, enabling quicker and safer responses to prevent extensive damage.

Insurance: Streamlining Processes and Fraud Detection

The insurance industry is also significantly impacted by AI and photos annotation. Both insurance providers and customers seek rapid processes, and AI plays a crucial role in accelerating workflows. AI’s data collection and analysis capabilities expedite inspection and evidence gathering.

AI also enhances fraud prevention through behavior and pattern analysis. AI-driven risk management systems are revolutionizing insurance business models by personalizing risk assessments and effectively managing risk for new and existing insurance policies. Fraud detection applications can identify inconsistencies in applications, making it easier to detect irregular customer activities.

Wrap Up: The Indispensable Role of Photos Annotation

Artificial intelligence and machine learning are the driving forces behind modern technology, impacting industries across the board, from healthcare and agriculture to security and sports. Photos annotation is a fundamental process for creating robust and reliable machine learning models, thereby enabling more advanced technologies. The importance of photos annotation cannot be overstated.

Remember, the quality of your machine learning model depends directly on the quality of your training data. High-quality, accurately labeled images, videos, and other data are essential for building models that deliver exceptional results and benefit humanity.

With a solid understanding of what photos annotation is, its various types, techniques, and diverse use cases, you are now equipped to advance your annotation projects and model development. Ready to take your projects to the next level?
