Are you concerned about a photo on Facebook that seems inappropriate? This article, brought to you by dfphoto.net, will guide you on how to report a Facebook photo effectively, ensuring a safer online experience for yourself and others. We will walk you through the reporting process, covering everything from identifying policy violations to understanding Facebook’s response.

1. What Types Of Facebook Photos Should I Report?

You should report photos that violate Facebook’s Community Standards, which prohibit hate speech, bullying, violence, and other harmful content. According to research from the Santa Fe University of Art and Design’s Photography Department, hate speech is the most common violation found in Facebook photos. Be sure to understand Facebook’s guidelines thoroughly, and consider bookmarking dfphoto.net for quick access to updated information on digital safety and responsible photography practices.

Here are some categories of content that violate Facebook’s Community Standards:

- Hate Speech: Attacks on people based on race, ethnicity, religion, sex, gender, sexual orientation, disability, or medical condition.

- Bullying and Harassment: Content that targets individuals with the intent to degrade or shame them.

- Violence and Graphic Content: Photos that depict violence, promote terrorism, or contain graphic content.

- Nudity and Sexual Activity: Content that is sexually suggestive or explicit, and any content that exploits, abuses, or endangers children.

- Misinformation: False or misleading information that could cause harm.

- Copyright Infringement: Unauthorized use of copyrighted material.
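
When deciding which reason to pick in the report dialog, it can help to treat the categories above as a simple lookup. The Python sketch below is purely illustrative: the `CATEGORIES` table, its keyword hints, and the `suggest_category` helper are assumptions made for this example and are not part of any Facebook tool or API.

```python
# Illustrative only: a lookup of the categories listed above.
# The keywords are hypothetical hints, not Facebook's actual criteria.
CATEGORIES = {
    "Hate Speech": ["race", "ethnicity", "religion", "disability"],
    "Bullying and Harassment": ["degrade", "shame", "harass"],
    "Violence and Graphic Content": ["violence", "terrorism", "graphic"],
    "Nudity and Sexual Activity": ["nudity", "sexual"],
    "Misinformation": ["false", "misleading"],
    "Copyright Infringement": ["copyright", "unauthorized"],
}


def suggest_category(description: str) -> list[str]:
    """Return every category whose hint keywords appear in the description."""
    text = description.lower()
    return [
        name
        for name, keywords in CATEGORIES.items()
        if any(word in text for word in keywords)
    ]


if __name__ == "__main__":
    print(suggest_category("Photo used to shame a classmate"))
```

A description can match more than one category or none at all; in either case the final choice in the report dialog is yours.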

2. How Do I Report A Photo On Facebook Step-By-Step?

Reporting a photo on Facebook is straightforward. Begin by clicking the three dots in the upper-right corner of the photo. Select “Report photo” and follow the on-screen instructions to indicate the reason for your report.

Here’s a step-by-step guide:

- Locate the Photo: Go to the photo you wish to report on Facebook.

- Click the Three Dots: Look for the three dots (···) in the top-right corner of the photo. This icon usually indicates more options.

- Select “Report photo”: A drop-down menu will appear. Choose the “Report photo” option.

- Choose a Reason: A list of reasons will appear, such as “Hate speech,” “Bullying,” or “Nudity.” Select the reason that best describes why you are reporting the photo.

- Provide Additional Information (Optional): Depending on the reason you select, Facebook may ask you to provide more information or context.

- Submit Your Report: Once you have chosen a reason and provided any additional information, click the “Submit” or “Send” button to submit your report.

3. What Happens After I Report A Facebook Photo?

After you report a photo, Facebook reviews it to determine whether it violates their Community Standards. If it does, Facebook will take action, such as removing the photo or suspending the account. Facebook’s team evaluates each report, and you may receive an update on the status of your report.

Here is what typically happens after you report a photo:

- Facebook Reviews the Report: Facebook’s moderation team reviews the reported photo against their Community Standards.

- Action Taken (If Necessary): If the photo violates Facebook’s policies, they will take appropriate action. This could include removing the photo, issuing a warning to the user, suspending the account, or other measures.

- Notification to the Reporter: Facebook may send you a notification about the outcome of your report. This notification will inform you whether the photo was found to violate their standards and what action, if any, was taken.

- No Action (If No Violation): If Facebook determines that the photo does not violate their Community Standards, no action will be taken. In this case, the photo will remain on Facebook.

4. How Long Does It Take For Facebook To Review A Reported Photo?

Facebook aims to review reports as quickly as possible, but the exact time can vary. Complex cases may take longer. Be patient and check for updates in your support inbox.

Here’s a general timeline and factors that affect the review time:

- Typical Review Time: Reports are typically reviewed within 24 to 48 hours, though this is an estimate rather than a guaranteed turnaround.

- Complexity: More complex cases, such as those involving legal issues or requiring additional investigation, may take longer.

- Volume of Reports: During periods of high activity, such as major events or crises, the review time may be longer due to the increased volume of reports.
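
To decide when to check your support inbox, you can turn the 24 to 48 hour estimate above into concrete times. This is a minimal sketch: the bounds come from this article’s estimate, not from any commitment by Facebook, and the `follow_up_window` helper is a hypothetical name used only here.

```python
from datetime import datetime, timedelta

# The 24 and 48 hour bounds come from the estimate in this article,
# not from any guarantee by Facebook.
EARLIEST = timedelta(hours=24)
LATEST = timedelta(hours=48)


def follow_up_window(reported_at: datetime) -> tuple[datetime, datetime]:
    """Return when to start checking and when the estimated window ends."""
    return reported_at + EARLIEST, reported_at + LATEST


if __name__ == "__main__":
    start, end = follow_up_window(datetime(2024, 5, 1, 9, 30))
    print(start.isoformat(), end.isoformat())
```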

5. Can I Report A Photo Anonymously On Facebook?

When you report a photo, the user who posted the photo will not know who reported it. Your report is confidential.

Here’s what you need to know about anonymous reporting:

- Confidentiality: Facebook keeps your identity confidential when you report a photo. The user who posted the photo will not be informed that you were the one who reported it.

- No Direct Notification: The reported user will not receive a notification or any information about who made the report.

- Privacy: Your privacy is protected throughout the reporting process.

6. What If Facebook Doesn’t Remove The Photo I Reported?

If Facebook does not remove the photo, it means they did not find it in violation of their Community Standards. You can explore other options, such as blocking the user. If the content is distressing, consider seeking support from friends, family, or mental health professionals, as advised by the American Psychological Association.

Here are additional steps you can take:

- Block the User: If the content is from a specific user, you can block them. Blocking prevents them from contacting you or seeing your content.

- Unfollow the User or Page: If you don’t want to block them but don’t want to see their content, you can unfollow the user or page.

- Adjust Your Privacy Settings: Review and adjust your privacy settings to control who can see your content and who can contact you.

- Contact Facebook Support: If you believe that the decision was made in error or if you have additional information, you can contact Facebook Support for further assistance.

7. How Do Facebook’s Community Standards Apply To Photos?

Facebook’s Community Standards apply to all content, including photos. These standards prohibit hate speech, violence, nudity, and other harmful content. Meta AI is working with industry partners to identify harmful content. Facebook is committed to maintaining a safe and respectful environment for its users.

Here’s a breakdown of how the Community Standards apply:

- Prohibited Content: The Community Standards prohibit a wide range of content, including hate speech, bullying, violence, graphic content, nudity, and misinformation.

- Reporting Mechanisms: Facebook provides mechanisms for users to report content that violates these standards.

- Review Process: Reported content is reviewed by Facebook’s moderation team to determine if it violates the standards.

- Enforcement Actions: If a photo violates the Community Standards, Facebook will take appropriate action, such as removing the photo or suspending the account.

8. What Role Does AI Play In Identifying Inappropriate Photos On Facebook?

AI plays a significant role in identifying inappropriate photos. AI systems help detect hate speech, violence, and other policy violations, and Meta is continually improving these tools. According to Meta, AI helps reduce the prevalence of hate speech.

Here’s how AI is utilized:

- Automated Detection: AI systems are used to automatically detect content that may violate Facebook’s Community Standards.

- Hate Speech Detection: AI is particularly effective at identifying and addressing hate speech, reducing its prevalence on the platform.

- Review Prioritization: AI helps prioritize content for human review, ensuring that the most potentially harmful content is addressed quickly.

- Content Moderation: AI assists human moderators by providing additional context and information, helping them make more informed decisions.

9. How Can I Protect Myself From Seeing Inappropriate Photos On Facebook?

You can protect yourself by adjusting your privacy settings, blocking users, and unfollowing pages. Report inappropriate content. Use Facebook’s tools to filter content and control your online experience.

Here are several strategies:

- Adjust Privacy Settings: Control who can see your posts and who can contact you.

- Block Users: Prevent specific users from interacting with you or seeing your content.

- Unfollow Pages and Groups: Stop seeing content from pages or groups that frequently post inappropriate material.

- Report Inappropriate Content: Use the reporting tools to flag content that violates Facebook’s Community Standards.

- Use Content Filters: Utilize available content filters to block specific words or phrases.

- Be Mindful of Friend Requests: Only accept friend requests from people you know and trust.

10. What Should I Do If I See A Photo On Facebook That Seems Illegal?

If you see a photo that seems illegal, report it to Facebook and consider contacting law enforcement. Preserve any evidence of the potentially illegal activity. Seek legal advice if necessary.

Here are the recommended steps:

- Report to Facebook: Immediately report the photo to Facebook using the reporting tools.

- Contact Law Enforcement: If you believe the photo depicts illegal activity, contact your local law enforcement agency.

- Preserve Evidence: Take screenshots or otherwise preserve any evidence of the photo and related information.

- Seek Legal Advice: If necessary, consult with an attorney to understand your rights and options.

11. Is It Possible To Appeal A Facebook Decision About A Reported Photo?

Yes, it is possible to appeal a Facebook decision if you disagree with their assessment of a reported photo. Facebook typically provides an option to appeal the decision directly through the notification or support inbox. You can provide additional context or information to support your appeal.

Here’s a detailed guide:

- Check Notification: After reporting a photo, check your notifications for updates from Facebook regarding the outcome of your report.

- Locate Appeal Option: If Facebook indicates that no action was taken on the reported photo, there may be an option to appeal the decision. This option is usually found within the notification or in your support inbox.

- Submit Appeal: Click on the appeal option and provide any additional information or context that supports your claim that the photo violates Facebook’s Community Standards.

- Review Process: Facebook will review your appeal and make a final decision.

- Final Decision: Facebook will notify you of the outcome of your appeal. The decision made after the appeal is typically final.

12. How Does Reporting Photos Help Improve The Facebook Community?

Reporting photos helps improve the Facebook community by removing harmful content and promoting a safer online environment. User reports are essential to the content moderation process. Each report contributes to a better, more respectful community for everyone.

Here’s how reporting photos makes a difference:

- Removal of Harmful Content: Reporting inappropriate photos leads to the removal of content that violates Facebook’s Community Standards.

- Safer Online Environment: By removing harmful content, reporting helps create a safer and more respectful online environment for all users.

- Support for Community Standards: Reporting reinforces Facebook’s Community Standards and helps ensure they are upheld.

- Improved Content Moderation: User reports provide valuable information that helps Facebook improve its content moderation processes.

- Positive Community Impact: By actively participating in reporting, users contribute to a positive and supportive community.

13. Can I Report A Photo On Facebook If I’m Not Friends With The Person Who Posted It?

Yes, you can report a photo on Facebook even if you are not friends with the person who posted it. The reporting process is available for all users, regardless of their connection to the poster.

Here’s what you need to know:

- Accessibility: The “Report photo” option is available on all photos, regardless of whether you are friends with the person who posted it.

- No Friendship Required: You do not need to be connected to the person who posted the photo to report it.

- Universal Reporting: Any user who sees a photo that violates Facebook’s Community Standards can report it.

14. What Types Of Penalties Can A Person Face For Posting Inappropriate Photos On Facebook?

A person who posts inappropriate photos on Facebook can face various penalties, including warnings, content removal, account suspension, or permanent account deletion. The severity of the penalty depends on the nature and frequency of the violations.

Here are the potential penalties:

- Warning: A first-time violation may result in a warning from Facebook.

- Content Removal: The inappropriate photo will be removed from Facebook.

- Account Suspension: Repeated or severe violations can lead to temporary account suspension.

- Permanent Account Deletion: In cases of extreme or repeated violations, Facebook may permanently delete the user’s account.

- Legal Consequences: In cases where the photo depicts illegal activity, the person may face legal consequences.

15. How Do I Report A Fake Profile That Is Posting Inappropriate Photos?

To report a fake profile posting inappropriate photos, go to the profile, click the three dots near the cover photo, select “Report profile,” and follow the instructions. Facebook will investigate the profile and take action if it violates their policies.

Here’s a detailed guide:

- Go to the Profile: Navigate to the fake profile you want to report.

- Click the Three Dots: Look for the three dots (···) near the profile’s cover photo.

- Select “Report profile”: A drop-down menu will appear. Choose the “Report profile” option.

- Choose a Reason: Select the reason that best describes why you are reporting the profile, such as “Fake account” or “Pretending to be someone.”

- Provide Additional Information (Optional): Depending on the reason you select, Facebook may ask you to provide more information or context.

- Submit Your Report: Once you have chosen a reason and provided any additional information, click the “Submit” or “Send” button to submit your report.

16. What Resources Does Dfphoto.Net Provide For Staying Safe Online?

Dfphoto.net offers resources for staying safe online, including guides on responsible photography, tips for protecting your work, and updates on digital safety. Visit our website for more information.

Here’s how dfphoto.net supports online safety:

- Responsible Photography Guides: We provide guides on ethical and responsible photography practices.

- Copyright Protection Tips: Learn how to protect your photographic work from unauthorized use.

- Digital Safety Updates: Stay informed about the latest digital safety measures and guidelines.

- Community Support: Engage with a community of photographers who prioritize online safety and ethical practices.

17. How Can I Report Copyright Infringement Of My Photos On Facebook?

To report copyright infringement, use Facebook’s copyright reporting tool. Provide information about the copyrighted work and the infringing material. Facebook will investigate and take action if necessary.

Here’s a step-by-step guide:

- Find the Infringing Photo: Locate the photo on Facebook that you believe infringes your copyright.

- Access the Copyright Reporting Tool: Go to Facebook’s copyright reporting tool.

- Provide Information: Fill out the form with details about your copyrighted work, including its title, description, and proof of ownership.

- Identify Infringing Material: Provide specific information about the infringing photo on Facebook, including its URL and location.

- Submit Your Report: Submit the completed form to Facebook for review.

- Review Process: Facebook will review your report and take action if they find that copyright infringement has occurred.
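
Before opening the copyright form, it can help to confirm that you have gathered every detail the steps above mention. The sketch below is one way to do that. The `CopyrightReport` class and its field names are hypothetical and simply mirror the details listed in this guide; they are not the actual fields of Facebook’s form.

```python
from dataclasses import dataclass, fields


@dataclass
class CopyrightReport:
    # Field names are hypothetical; they mirror the details listed above.
    work_title: str = ""
    work_description: str = ""
    proof_of_ownership: str = ""
    infringing_url: str = ""


def missing_fields(report: CopyrightReport) -> list[str]:
    """List the fields that are still empty or contain only whitespace."""
    return [f.name for f in fields(report) if not getattr(report, f.name).strip()]


if __name__ == "__main__":
    draft = CopyrightReport(
        work_title="Desert Dawn",
        infringing_url="https://example.com/photo/1",
    )
    print(missing_fields(draft))
```

An empty list means every detail in the checklist has been filled in and you are ready to complete the form.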

18. What Is Facebook’s Policy On Photos That Depict Violence?

Facebook’s policy prohibits photos that depict violence, especially if they celebrate or promote harm. Such photos will be removed, and the poster may face penalties.

Here’s what you need to know:

- Prohibition: Facebook prohibits photos that depict violence, particularly if they celebrate or promote harm.

- Content Removal: Photos that violate this policy will be removed from the platform.

- Penalties: Users who post violent content may face warnings, account suspension, or permanent account deletion.

- Context Matters: Facebook considers the context of the photo when determining whether it violates the policy. For example, photos of newsworthy events may be allowed with appropriate context.

19. How Can I Report A Photo That Is Being Used To Bully Or Harass Someone?

To report a photo used for bullying or harassment, click the three dots on the photo, select “Report photo,” and choose the “Bullying and harassment” option. Provide details about how the photo is being used to harass the individual.

Here’s a detailed guide:

- Locate the Photo: Go to the photo that is being used for bullying or harassment on Facebook.

- Click the Three Dots: Look for the three dots (···) in the top-right corner of the photo.

- Select “Report photo”: A drop-down menu will appear. Choose the “Report photo” option.

- Choose “Bullying and harassment”: Select the “Bullying and harassment” option from the list of reasons.

- Provide Details: Provide specific details about how the photo is being used to bully or harass the individual.

- Submit Your Report: Click the “Submit” or “Send” button to submit your report.

20. What Are The Best Practices For Reporting A Photo On Facebook?

The best practices for reporting a photo on Facebook include providing accurate details, selecting the most appropriate reason for reporting, and including any relevant context. Follow Facebook’s guidelines and be respectful in your report.

Here’s a summary of best practices:

- Provide Accurate Details: Include accurate and specific information about the photo and why you are reporting it.

- Select the Right Reason: Choose the reason for reporting that best describes the violation.

- Include Context: Provide any relevant context that helps explain the situation.

- Follow Guidelines: Adhere to Facebook’s reporting guidelines and policies.

- Be Respectful: Maintain a respectful and professional tone in your report.

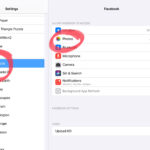

21. How Do I Report A Facebook Photo On My Mobile Device?

To report a photo on your mobile device, open the Facebook app, navigate to the photo, tap the three dots in the upper-right corner, select “Report photo,” and follow the instructions.

Here’s a detailed guide:

- Open Facebook App: Launch the Facebook app on your mobile device.

- Navigate to the Photo: Find the photo you want to report.

- Tap the Three Dots: Tap the three dots (···) in the top-right corner of the photo.

- Select “Report photo”: A menu will appear. Tap the “Report photo” option.

- Choose a Reason: Select the reason that best describes why you are reporting the photo.

- Provide Details (Optional): Provide additional information or context if prompted.

- Submit Your Report: Tap the “Submit” or “Send” button to submit your report.

22. Can I Report Multiple Photos From The Same User At Once?

While Facebook doesn’t offer a direct way to report multiple photos simultaneously, you can report each photo individually following the standard reporting process.

Here’s the workaround:

- Report Each Photo: Go through each photo individually and follow the reporting steps for each one.

- Provide Consistent Details: When reporting each photo, provide consistent details and context to help Facebook understand the pattern of violations.

- Use Additional Information: In the additional information section of each report, mention that you are reporting multiple photos from the same user due to similar violations.

23. How Does Facebook Handle Reports Of Photos That Promote Self-Harm?

Facebook takes reports of photos that promote self-harm very seriously. They have specific policies and resources to address such content. They work with mental health organizations to provide support to users who may be at risk.

Here’s what happens when a report is made:

- Immediate Review: Reports of photos promoting self-harm are given immediate attention.

- Content Removal: Photos that violate the policy are removed from the platform.

- User Support: Facebook provides resources and support to users who may be at risk of self-harm, including links to mental health organizations.

- Law Enforcement Notification: In cases where there is an imminent risk of harm, Facebook may notify law enforcement.

24. What Is The Difference Between Reporting A Photo And Blocking A User On Facebook?

Reporting a photo flags it for review by Facebook, while blocking a user prevents them from interacting with you. Reporting addresses policy violations, while blocking manages personal interactions.

Here’s a breakdown:

- Reporting a Photo: Flags the photo for review by Facebook’s moderation team. If the photo violates Facebook’s Community Standards, it may be removed, and the user may face penalties.

- Blocking a User: Prevents the user from interacting with you on Facebook. They will not be able to see your posts, send you messages, or add you as a friend.

25. How Do I Report A Photo That Is Being Used For Identity Theft?

To report a photo being used for identity theft, report the profile impersonating you or someone you know. Use the “Pretending to be someone” option and provide details to Facebook.

Here’s a step-by-step guide:

- Go to the Fake Profile: Navigate to the profile that is using the photo for identity theft.

- Click the Three Dots: Look for the three dots (···) near the profile’s cover photo.

- Select “Report profile”: A drop-down menu will appear. Choose the “Report profile” option.

- Choose “Pretending to be someone”: Select the “Pretending to be someone” option from the list of reasons.

- Specify Who Is Being Impersonated: Indicate whether the profile is pretending to be you or someone you know.

- Provide Details: Provide any additional information that helps Facebook understand the situation.

- Submit Your Report: Click the “Submit” or “Send” button to submit your report.

26. What If I Am Being Targeted By A Group Posting Inappropriate Photos About Me?

If you’re targeted by a group posting inappropriate photos, report each photo, block the group members, and adjust your privacy settings. Contact Facebook support for further assistance.

Here are the recommended steps:

- Report Each Photo: Report each photo individually using the reporting tools.

- Block Group Members: Block the members of the group to prevent them from further harassing you.

- Adjust Privacy Settings: Review and adjust your privacy settings to control who can see your content and who can contact you.

- Contact Facebook Support: Reach out to Facebook Support for additional assistance and guidance.

- Document Everything: Keep a record of all instances of harassment, including screenshots and dates.
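
One way to keep the record described above is a simple dated log that you can later hand to Facebook Support or law enforcement. The following is a minimal sketch using Python’s standard `csv` module. The column names and the `new_log` and `log_incident` helpers are assumptions chosen for this example, and the URL and file name are placeholders.

```python
import csv
import io
from datetime import datetime, timezone
from typing import Optional

# Hypothetical column layout for a personal incident log.
HEADER = ["recorded_at_utc", "photo_url", "screenshot_file", "note"]


def new_log() -> io.StringIO:
    """Create an in-memory CSV log that starts with the header row."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(HEADER)
    return buffer


def log_incident(
    buffer: io.StringIO,
    photo_url: str,
    screenshot_file: str,
    note: str,
    when: Optional[datetime] = None,
) -> None:
    """Append one incident; defaults to the current UTC time."""
    when = when or datetime.now(timezone.utc)
    csv.writer(buffer).writerow([when.isoformat(), photo_url, screenshot_file, note])


if __name__ == "__main__":
    log = new_log()
    log_incident(log, "https://example.com/p/1", "shot-001.png", "Posted in group")
    print(log.getvalue())
```

To keep the log on disk, write `log.getvalue()` to a file and store it alongside the screenshots it references.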

27. How Does Facebook Protect Children From Inappropriate Photos?

Facebook has strict policies against content that exploits, abuses, or endangers children. They use AI and human review to detect and remove such content. They also work with law enforcement to address child exploitation.

Here’s how Facebook protects children:

- Strict Policies: Facebook has strict policies against content that exploits, abuses, or endangers children.

- AI and Human Review: They use AI and human review to detect and remove such content.

- Reporting Mechanisms: They provide mechanisms for users to report content that violates these policies.

- Law Enforcement Collaboration: Facebook works with law enforcement to address cases of child exploitation.

28. What Should I Do If I Suspect A Photo On Facebook Is Being Used For Human Trafficking?

If you suspect a photo is being used for human trafficking, report it to Facebook immediately and contact law enforcement. Provide as much detail as possible.

Here’s what you need to do:

- Report to Facebook: Report the photo to Facebook immediately using the reporting tools.

- Contact Law Enforcement: Contact your local law enforcement agency to report your suspicions.

- Provide Details: Provide as much detail as possible about why you suspect the photo is related to human trafficking.

- Preserve Evidence: If possible, take screenshots or otherwise preserve any evidence of the photo and related information.

29. How Can I Educate Others About Reporting Inappropriate Photos On Facebook?

Educate others by sharing this article, discussing Facebook’s Community Standards, and demonstrating how to report content. Promote responsible online behavior.

Here are some ways to educate others:

- Share This Article: Share this article with friends, family, and colleagues to educate them about reporting inappropriate photos on Facebook.

- Discuss Community Standards: Talk about Facebook’s Community Standards and why they are important.

- Demonstrate Reporting: Show others how to report content on Facebook.

- Promote Responsible Behavior: Encourage responsible online behavior and ethical use of social media.

- Organize Workshops: Conduct workshops or seminars to educate people about online safety and reporting mechanisms.

30. How Can I Stay Updated On Facebook’s Policies Regarding Inappropriate Photos?

Stay updated by regularly reviewing Facebook’s Community Standards and following official announcements. Visit dfphoto.net for updates and resources on digital safety and photography.

Here are the best ways to stay informed:

- Review Community Standards: Regularly review Facebook’s Community Standards to stay informed about their policies.

- Follow Official Announcements: Keep up with official announcements from Facebook regarding policy changes and updates.

- Visit Dfphoto.net: Visit dfphoto.net for updates and resources on digital safety and photography.

- Subscribe to Newsletters: Subscribe to newsletters and publications that cover social media policies and online safety.

Reporting inappropriate photos on Facebook is a crucial step in maintaining a safe and respectful online community. By understanding the types of content to report, following the reporting process, and staying informed about Facebook’s policies, you can contribute to a better online experience for yourself and others. Remember to visit dfphoto.net for more resources on responsible photography and digital safety.

Ready to enhance your photography skills and explore a supportive community? Visit dfphoto.net today to discover insightful tutorials, browse stunning photo collections, and connect with fellow photography enthusiasts in the USA. Let’s create a safer and more inspiring online environment together. Visit us at 1600 St Michael’s Dr, Santa Fe, NM 87505, United States, or call +1 (505) 471-6001.

FAQ: Reporting Facebook Photos

1. Can I report a Facebook photo without having a Facebook account?

No, you typically need a Facebook account to report a photo. This is because Facebook requires a registered user to initiate and track the report.

2. Will the person who posted the photo know that I reported them?

No, Facebook keeps your identity confidential when you report a photo. The person who posted the photo will not be informed that you were the one who reported it.

3. How does Facebook decide whether a photo violates its Community Standards?

Facebook reviews reported photos against its Community Standards, considering factors such as the content, context, and potential harm.

4. What happens if I repeatedly report photos that don’t violate Facebook’s policies?

Repeatedly reporting photos that don’t violate Facebook’s policies may result in warnings or restrictions on your reporting privileges.

5. Can I report a photo that I disagree with, even if it doesn’t violate Facebook’s policies?

While you can report any photo, Facebook will only take action if it violates its Community Standards. Disagreeing with a photo is not a valid reason for removal unless it also violates these standards.

6. Is there a limit to how many photos I can report on Facebook?

There is no specific limit, but Facebook may monitor and restrict accounts that excessively report content without valid reasons.

7. How can I check the status of a photo I’ve reported?

You can usually check the status of a reported photo in your Facebook support inbox or notifications.

8. What should I do if I think Facebook made a mistake in reviewing a reported photo?

If you believe Facebook made a mistake, you can appeal the decision through the provided channels, typically found in the notification or support inbox.

9. Does Facebook have different policies for different types of inappropriate photos?

Yes, Facebook has specific policies for different types of inappropriate photos, such as those depicting hate speech, violence, nudity, or bullying.

10. How does reporting photos help protect vulnerable individuals on Facebook?

Reporting photos helps protect vulnerable individuals by removing harmful content, promoting a safer online environment, and supporting Facebook’s Community Standards.